vMotion took the industry by storm when it was introduced in the early days of ESX. I remember being blown away by the idea of a live migration when I first started my virtualisation career.

We’ve taken vMotion for granted for a few years now, but how is VMware improving it? You may have missed significant innovations in vSphere 7.0 U1, released in October 2020.

vSphere 7.0 Update 1

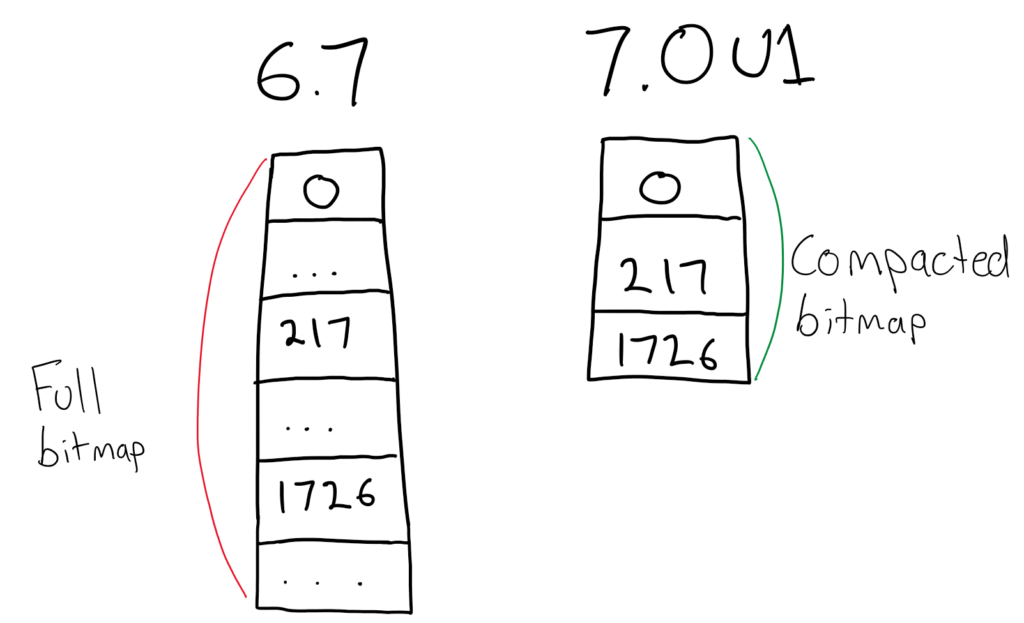

Significant enhancements were made to migrations of ‘monster’ virtual machines, typically those beyond 64 vCPUs and 512 GB of RAM. VMware achieved this by rewriting the memory pre-copy mechanism around a new page-tracing technique.

In earlier vSphere releases, vMotion had to stop every vCPU from executing guest instructions while the page trace was installed on the VM (a stop-based trace). This was particularly problematic for large virtual machines, which in vSphere 6.7 could reach 6 TB of RAM and 256 vCPUs; on these machines the stop-based trace process could take hundreds of seconds to run.

With vSphere 7.0 Update 1, the stop-based trace was replaced with a technique called loose page-trace. vSphere now needs to stop only a single vCPU to set up the trace, which makes the stun period much shorter. For large virtual machines, this can noticeably reduce vMotion time.
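Page tracing matters because pre-copy has to know which pages the guest dirtied while memory was being sent. As a rough illustration, here is a toy model of a generic iterative pre-copy loop. It is not VMware's implementation: the function name, the constant dirty rate, and the switchover threshold are all assumptions made for the sketch.

```python
def precopy_rounds(memory_gb, dirty_gb_per_s, link_gb_per_s,
                   switchover_gb=0.5, max_rounds=30):
    """Toy model of iterative memory pre-copy (hypothetical, simplified).

    Each round sends the pages that are currently outstanding. While that
    copy is in flight the guest keeps dirtying pages at a constant rate,
    and those pages become the work for the next round. The loop stops
    when the outstanding set is small enough for the final switchover,
    or when max_rounds is reached.
    """
    if min(memory_gb, link_gb_per_s) <= 0 or dirty_gb_per_s < 0:
        raise ValueError("sizes and rates must be positive")

    rounds = []            # GB sent in each pre-copy round
    to_send = memory_gb    # the first round sends all guest memory
    while to_send > switchover_gb and len(rounds) < max_rounds:
        rounds.append(to_send)
        copy_seconds = to_send / link_gb_per_s
        # Pages dirtied during this round, capped at the VM's memory size.
        to_send = min(memory_gb, dirty_gb_per_s * copy_seconds)
    return rounds, to_send


if __name__ == "__main__":
    # Assumed example: 1 TB VM, 0.5 GB/s dirty rate, 10 GB/s of usable bandwidth.
    sent, remaining = precopy_rounds(1024, 0.5, 10)
    for i, gb in enumerate(sent, start=1):
        print(f"round {i}: {gb:.2f} GB")
    print(f"left for switchover: {remaining:.3f} GB")
```

The model only converges when the link is faster than the guest dirties memory, which is one reason both cheap page tracing and high vMotion bandwidth matter for very large VMs.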

To read more, head over to the vMotion Performance Study.

vSphere 7.0 Update 2

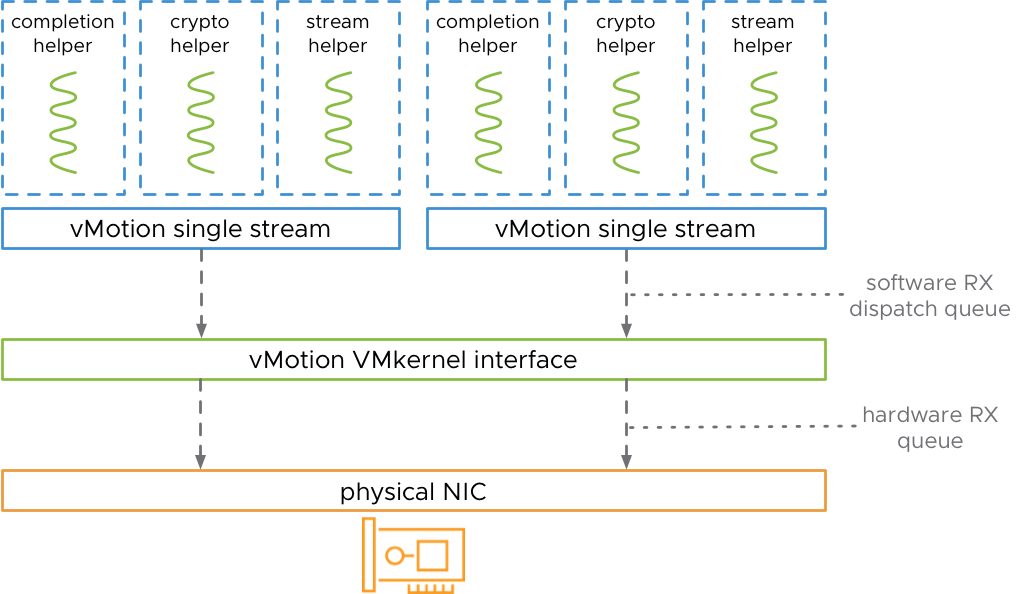

Now to the point of this blog post. Starting with vSphere 7.0 Update 2, vMotion can fully saturate high-bandwidth NICs, all the way up to 100 GbE.

This was previously possible if you took the time to dig into the advanced settings, but it now works out of the box. vSphere automatically creates multiple vMotion streams, based on the available bandwidth of the underlying physical network used for the vMotion vmkernel interface(s).
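To get a feel for how stream count might scale with link speed, here is a small sketch. It assumes a single stream can drive roughly 15 Gbps; that figure and the function name are assumptions for illustration, not values taken from this post or from any vSphere API, and the real selection logic inside ESXi may differ.

```python
import math

# Assumption for illustration: approximate throughput of one vMotion stream.
ASSUMED_GBPS_PER_STREAM = 15


def estimate_streams(link_gbps, per_stream_gbps=ASSUMED_GBPS_PER_STREAM):
    """Estimate how many streams are needed to fill a link (hypothetical helper)."""
    if link_gbps <= 0 or per_stream_gbps <= 0:
        raise ValueError("bandwidth values must be positive")
    # Round up so the last, partially used stream is still counted.
    return max(1, math.ceil(link_gbps / per_stream_gbps))


if __name__ == "__main__":
    for nic_gbps in (10, 25, 40, 100):
        print(f"{nic_gbps} GbE -> {estimate_streams(nic_gbps)} stream(s)")
```

Under that assumption, a 10 GbE uplink is already covered by one stream, which is why the change matters most on 25, 40, and 100 GbE networks.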

For more information on all things vMotion, be sure to check out core.vmware.com/vmotion.